Why Feature Reviews Matter (and How We Ran Them at Palm)

At Palm Computing, we worked against hardware manufacturing schedules that made software deadlines absolute. To avoid the dreaded "black box" of development where stakeholders only see the result at the bitter end, we implemented Feature Reviews. These regular check-ins did more than just report status; they forced transparency, mitigated risk, and became a crucial tool I still use with my teams today.

Origins at Palm

First, a little background on the origin story. [Palm][] was a hardware company, which meant most projects were driven by the manufacturing schedule. In this environment, one key lesson for software teams like mine was that the schedule was not going to slip. It was a good forcing function, though: whatever software was ready by the cutoff date was what we shipped. We could release patches later, but everything really had to be done by then.

This meant we were essentially following a waterfall process for software too, despite our (pre-agile) attempts to make our projects more incremental. Our solution was to add intermediate progress updates, which we shared across teams and with our engineering and product leadership. We wanted to combat the waterfall pattern in which a long development period is a "black box" until the result pops out at the end.

It's a simple concept: add Feature Reviews as intermediate checkpoints along the way.

These reviews were useful for bringing together the right set of peers and leaders to say, in effect, "here's what we're building." As I recall, we fine-tuned them to cover only the most impactful or risky projects, especially those that had both desktop and handheld components. Holding these reviews regularly also helped when we needed to make course corrections or cut scope to hit our deadlines.

Fun fact #1: While writing this up, I remembered something about life on a software team at a hardware company. All our project end milestones were "GM" (Gold Master), because our code was literally going to be cut to a master CD-ROM or master device ROM. Everywhere I've worked since has called it "GA" (General Availability).

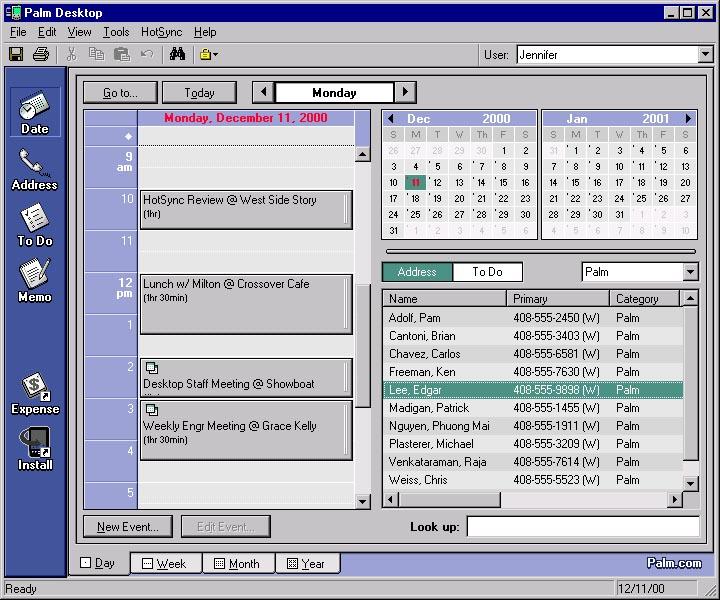

Fun fact #2: My team owned the desktop applications, including Palm Desktop, and we liked to hide easter eggs in the product that listed all the people who built it. We even got our names into some official product marketing screenshots!

How to run a feature review

Even in an agile enterprise software environment like Tableau, feature reviews can still be valuable. Most agile teams already have sprint reviews, but feature reviews can include a broader audience, span a longer time horizon, and be more feature-centric.

Here's my suggested outline for holding a feature review, including the audience and what you should cover:

- Owner - Prepared and led by the feature team (engineering & product).

- Audience
  - Stakeholders (including leadership, architects, field)
  - Peer and related teams
  - Other interested people in the company: those who care about what you're building, whom you want to keep informed and ask for feedback

- Contents
  - What you're building (setting context for those who aren't familiar)
  - Current status, shown through live demos
  - What work remains (schedule discussion)
  - Open questions or potential risks to discuss

- Frequency - This will depend on your overall project timeline, but we found every 4 to 6 weeks to be about right. So in a roughly 12-week release cycle, we meet 2 or 3 times.

- Logistics - Pay attention to the usual important details for a meeting like this, including advertising it in advance, keeping the conversation focused, and capturing the recording, slides, and action items to share afterwards.

- Outcomes - The team should agree ahead of time whether the purpose is mostly to share status or to get specific decisions. If the right stakeholders have been included, decisions and adjustments can be made during the review or, at a minimum, captured for offline resolution.

The review contents will likely change a bit as you make progress. The first review can focus more on requirements and planning, but the big value in later sessions is showing off what has been built so far. For one project I'm leading, our first review was more top-down (requirements, design, dates, who's working on it, etc.) with the demos at the end. After that we flipped it (bottom line up front) and jumped straight into the demos, with project status and logistics to follow.

As an engineering manager, I see a bonus here: giving your team the spotlight and encouraging them to participate in and drive the demos. Presenting your work is a great skill to have, and these feature reviews can be a safe space to practice it.

Comparison to the movie business

I had a random thought comparing feature reviews for software projects to the "dailies" used in the movie business. The AI chatbots I asked tended to agree with me (no surprise there!).

Copilot wrote this comparison:

Feature reviews in software projects and dailies in movie production both serve as regular, structured opportunities to review progress, engage stakeholders, and mitigate risks. The main differences lie in their frequency, scope, and the nature of what's being reviewed. Feature reviews are broader and less frequent, supporting complex, multi-phase software development. Dailies are immediate and focused, supporting the fast-paced, high-stakes environment of film production.

Comparison Table: Feature Reviews vs. Dailies

| Aspect | Feature Reviews (Software Projects) | Dailies (Movie Production) |
|---|---|---|
| Purpose | Share progress, surface risks, gather feedback, and keep stakeholders informed | Review footage for technical/artistic quality, spot issues early |
| Frequency | Periodic (every 4–6 weeks, or as fits project timeline) | Daily (after each day of filming) |
| Audience | Broad: feature team, leadership, architects, related teams, interested stakeholders | Core creative/technical team: director, cinematographer, producers |
| Content | Demos, status updates, remaining work, risks, questions, action items | Raw, unedited footage from the day’s shoot |
| Focus | Holistic project status and cross-team communication | Immediate technical and creative review of filmed material |
| Format | Meetings with presentations, live demos, Q&A, and documentation | Screening sessions, often informal, focused on viewing footage |
| Outcome | Feedback, action items, risk mitigation, improved transparency | Decisions on reshoots, technical fixes, or creative adjustments |
| Skill Development | Opportunity for team members to practice presenting and communicating | Opportunity for crew to refine filming techniques and performance |

And ChatGPT nailed it with this generated visual comparison ☺